I’m a big believer that humanity still has a huge advantage over AI. There have been countless discussions about AI taking jobs, and it will take some, but at least for now, nothing can replace the expertise that you’ve accumulated over the years.

BCG recently found that AI success is 10% algorithms, 20% technology, and 70% people and process.

Read that again.

Most companies are pouring money into the 30% that matters least. And if you’re honest with yourself, you probably are too.

I know — because I’ve done this myself.

Over the past two years, I’ve cycled through AI tools like someone who keeps buying running shoes but never actually goes running. ChatGPT was the default. Then Claude joined the fray. Last November, Gemini 3.0 showed up and scared the heck out of the others. Since about then, Claude has (imo) gotten dramatically better. Now there’s Claude Code and Claude Cowork, both huge changes for how we will work with AI.

And those are just the general-purpose AIs. That’s before we even get to tools for graphic design, video, and more technical work.

Each one felt like the answer. Each one came with that familiar rush — this is the tool that changes everything.

And each time, my actual workflow didn’t change a whole lot.

But now things are changing.

Now we can see why our way of working hasn’t changed much. But let’s first look at some data, because how organizations adopt AI says a lot about how we adopt it as individuals.

The Expensive Experiment Nobody Talks About

Zylo’s analysis of 30 million SaaS licenses found that companies use only 49% of what they’re paying for — leaving an estimated $18 million in annual waste per company. They call it the “license graveyard.” Fitting.

And the graveyard is growing. AI-native tool spending grew 75% year-over-year in 2024. ChatGPT jumped from the 14th to the 2nd most expensed app in a single year. The money moved fast. The workflows didn’t.

According to BCG, 74% of companies are stuck in what many are calling pilot purgatory — endless experimentation with no scaled results. Only 26% have figured out how to move past pilots and deliver real, measurable ROI from AI.

It gets worse. S&P Global found that 42% of companies scrapped the majority of their AI initiatives in 2025. That’s up from 17% a year earlier. That’s not a learning curve. That’s a bonfire.

From the looks of it, most AI tools aren’t failing; they are simply getting abandoned because people aren’t using them. And it doesn’t matter whether it’s AI or another SaaS tool. If behavior change doesn’t come, then it’s probably not going to work.

Long story short: the tools aren’t the problem. The tools are better than they’ve ever been. The problem is how we choose them and what we focus on.

You’re Solving a Technology Problem That Isn’t a Technology Problem

Gartner calls it the “core misdiagnosis” — treating AI as a technology problem when it’s actually an operating model problem.

Go back to that BCG finding. The 10-20-70 rule. If 70% of AI success comes down to people, processes, and how your organization actually works — then buying another subscription is like buying another pair of running shoes when you don’t have a training plan.

According to BCG, the leaders who win with AI “fundamentally redesign workflows.” The ones who don’t? They “just try to automate old, broken processes.”

That distinction matters more than any tool comparison.

As one respondent put it in the 2024 Content Marketing Institute benchmark study: “AI will continue to be the shiny thing until marketers realize the dedication required to develop prompts, go through the iterative process, and fact-check output. AI can help you sharpen your skills, but it isn’t a replacement solution.”

AI doesn’t replace clarity. It amplifies whatever’s already there. If you’re clear, AI makes you faster. If you’re unclear, AI makes you efficiently unclear. If your process is broken, AI gives you faster chaos.

When the Tech Works but the Organization Doesn’t

Let’s look at this through the lens of a large enterprise organization. Whether it’s an organization or a person, the same rules apply. I came across an interesting write-up about Ford’s commercial vehicle division. They built a predictive maintenance AI that could anticipate 22% of certain mechanical failures. The technology worked. Full stop.

Then it hit reality. Integration issues with legacy dealer systems. Inconsistent adoption across dealerships. No clear ownership of the workflow. The AI was ready. The organization wasn’t, and the project stalled after the pilots.

A technically successful AI model — killed by unclear process and unaligned stakeholders.

Now, that’s just one example. But the pattern repeats everywhere companies implement technology without taking care of the people, the purpose, the communication, and all of the things necessary to make that implementation a success. The tech is rarely the bottleneck. The organization is.

If you’re a marketing leader, this probably sounds familiar.

The Pressure Problem

Here’s what makes this harder for you specifically.

ON24’s survey of 500+ B2B marketers found that 72% of executives are asking their employees how they plan to use AI. That’s not curiosity — that’s pressure. And when leadership asks that question, the natural response is to buy something. Subscribe. Demo. Implement. Show you’re doing something.

The result? Tools purchased to look proactive, not to solve problems. And when those tools don’t deliver — because nobody mapped the workflows, trained the teams, or defined what success looks like — you’re left with a credibility gap. The board wanted ROI. You delivered shelfware. And now you’re the one explaining why the “AI strategy” didn’t produce results.

BCG’s research shows that only 11% of companies realize significant value once an AI initiative stalls for more than six months. The clock starts ticking the moment you subscribe.

The Hype Phase Is Ending. The Clarity Phase Is Starting.

But there’s good news. The market is correcting.

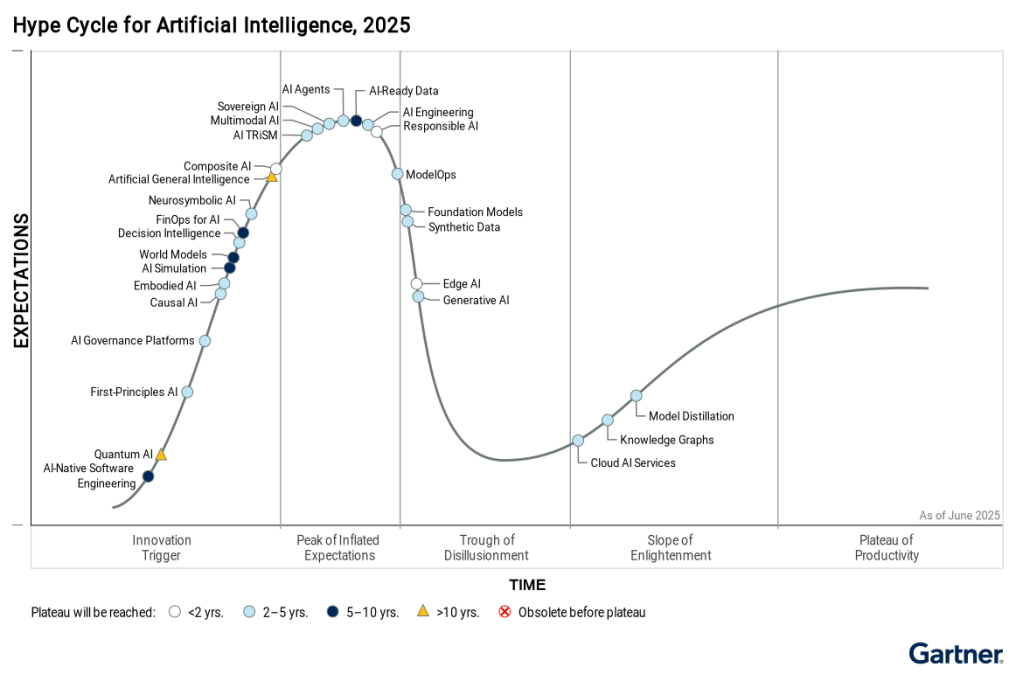

Gartner’s Hype Cycle now places generative AI squarely in the Trough of Disillusionment — past the Peak of Inflated Expectations, heading toward what actually works. The hype phase is ending. The clarity phase is starting.

And the evidence shows up in who’s winning.

McKinsey’s latest global AI survey points to a shift toward agentic AI. With that shift, planning and governance become even more crucial than before.

With AI products evolving in this direction, you can see the shift. Claude Code requires you to define a project structure, provide context, and articulate what you’re building. Cowork asks for clear task definitions, specific goals, explicit constraints. These tools don’t reward vague prompting. They reward clarity.

The product design has shifted from “ask AI anything” to “tell AI exactly what you need.” And the people who bring structure — who know their process, their audience, their gaps — are getting far more value than those who don’t.

I’ve seen this firsthand with my own newsletter workflow. Because the process is clearly defined, I can automate much of it and keep the parts I enjoy doing, all while maintaining human-in-the-loop governance.

My newsletter has a clear structure: research, outline, draft, edit, format, schedule. The repetitive parts — initial research organization, formatting, scheduling — those have become AI tasks. The creative parts — writing, voice, editorial decisions — I still do. But even some of these I’m testing to see how far this workflow can be pushed.

Now, that structure leans heavily on the fact that I’m deeply familiar with the editorial process. And this is actually where AI is heading. Understanding what the outcome should look like and what a great process is gives you a massive head start — because you know how to plan for success. You’re not guessing at prompts. You’re directing a workflow you’ve already mastered.

That principle applies whether you’re running a newsletter, an accounting workflow, or a full marketing operation. Here’s a simple workflow for you to get started.

The AI Friction Audit

Here’s the framework I think about almost every time I open an AI tool.

Three steps. Fifteen minutes. Zero new tools required.

Step 1: Map Your Friction

List five tasks you do every week that drain your energy or eat up your time. Be specific. Not “content creation” — that’s too vague. Try “writing the first draft of the weekly email” or “reformatting the slide deck for the leadership meeting” or “pulling engagement data from three different platforms into one report.”

The rule: if it drains you AND it’s repetitive, it’s a candidate.

Your 5-minute action: Open a blank doc. Set a timer. List everything you did last week that felt like a drain. Don’t filter. Just dump.

Step 2: Score for Clarity

For each task on your list, ask one question: “Could I explain this task to a stranger in one sentence?”

If yes, it’s ready for AI. The process is clear enough that a tool could actually help.

If no — you have a clarity problem, not a tool problem. And no subscription will fix that.

This is where most people discover the gap. “Create social media content” is too vague for any AI tool to help you meaningfully. “Turn each newsletter into three LinkedIn posts using the hook-insight-CTA structure” is specific enough to actually work.

Most leaders find that two or three of their “AI opportunities” are actually clarity problems in disguise. The process isn’t defined well enough for themselves, let alone for a tool.

Your 5-minute action: Take your top three drains from Step 1. Write a one-sentence description of each. If you can’t — that’s your clarity gap. Fix that before you buy anything.

Step 3: Start With One

Pick one task from your scored list. One.

Find or use one AI tool that addresses that specific friction. Build the workflow. Test it for two weeks. Measure one thing: did it actually save time?

Only then move to the next.

The rule: never subscribe to a new AI tool until you’ve fully integrated the last one. This single constraint would have saved me hundreds of dollars and months of tool-hopping.

Your 5-minute action: Pick your one task. Don’t research tools yet. First, write down exactly what the workflow looks like today — each step, in order. That document is your implementation plan. The tool comes last.
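
If you prefer to keep the audit in a small script instead of a blank doc, the three steps above could be sketched roughly like this. It is only an illustration: the `Task` fields, the function names, and the "Quarterly strategy offsite" entry are hypothetical, and the clarity score is still your own yes/no answer to the one-sentence question, not something the code works out for you.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    drains_me: bool           # Step 1: does it drain your energy or eat your time?
    repetitive: bool          # Step 1: do you do it every week?
    one_sentence_clear: bool  # Step 2: your own answer to the one-sentence question


def friction_audit(tasks):
    """Sort tasks into AI-ready ones and clarity gaps, then pick one to start with."""
    # Step 1 rule: a task is a candidate only if it drains you AND is repetitive.
    candidates = [t for t in tasks if t.drains_me and t.repetitive]
    # Step 2: clear candidates are ready for AI; the rest are clarity problems.
    ready = [t for t in candidates if t.one_sentence_clear]
    clarity_gaps = [t for t in candidates if not t.one_sentence_clear]
    # Step 3: start with exactly one task (here, simply the first ready one).
    start_with = ready[0] if ready else None
    return ready, clarity_gaps, start_with


def saved_time(minutes_before, minutes_after):
    """Step 3's single measurement after the two-week test: did it save time?"""
    return minutes_after < minutes_before


# The first two tasks come from the examples in Step 2; the third is made up.
tasks = [
    Task("Turn each newsletter into three LinkedIn posts using the hook-insight-CTA structure", True, True, True),
    Task("Create social media content", True, True, False),
    Task("Quarterly strategy offsite", True, False, True),
]

ready, clarity_gaps, start_with = friction_audit(tasks)
print("Start with:", start_with.name if start_with else "nothing yet, fix clarity first")
print("Clarity gaps:", [t.name for t in clarity_gaps])
```

The script is deliberately boring. The judgment calls stay with you, and the code only enforces the rules: drain plus repetition, clarity before tools, and one task at a time.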

The Point

My AI tool graveyard taught me something I should have known from the start.

The tool was never the problem. I was. I didn’t have a clarity problem with AI. I had a clarity problem with myself — what I needed, what drained me, what my actual workflow looked like beneath all the improvising.

The moment I got clear on that, the right tools found me. Not the other way around.

This matters more than ever now. Given where Claude Code and Claude Cowork are heading, your plan, your processes, and your SOPs will be the real foundation for driving efficiency.

AI isn’t the point. Clarity is the point. Get that right — and the right tools will find you.

Q: Why does most AI adoption fail?
A: Most AI adoption fails because organizations treat it as a technology problem when it’s actually an operating model problem. BCG’s 10-20-70 rule shows that AI success is only 10% algorithms and 20% technology; the remaining 70% comes from people, processes, and organizational readiness. Companies that skip workflow redesign and jump straight to tool adoption end up with shelfware, not results.

Q: What is AI pilot purgatory?
A: AI pilot purgatory describes the state where organizations run endless AI experiments without scaling past the pilot stage. BCG found that 74% of companies are stuck here, testing tools that show promise in controlled settings but never delivering measurable ROI at scale. The primary cause is launching pilots without clear processes, success metrics, or organizational alignment.

Q: How do I choose the right AI tool?
A: Start by mapping your friction: the specific, repetitive tasks that drain your energy and time. Then test each task for clarity: can you explain it to a stranger in one sentence? If yes, it’s ready for AI. If no, you have a clarity problem, not a tool problem. Only after defining the specific friction should you evaluate which tool addresses it. The AI Friction Audit framework helps leaders stop collecting subscriptions and start integrating tools that actually stick.

Most AI tools don’t fail — they get abandoned. Companies are spending on the 30% that matters least (algorithms and technology) while neglecting the 70% that drives success (people and process). The fix isn’t more tools — it’s more clarity. The AI Friction Audit helps leaders map their friction, test for clarity, and integrate one tool at a time instead of collecting subscriptions.